the bullet hole misconception

on escaping the present to invent the future

This is an extended text version of a talk first presented at Voxxed Days Belgrade in October 2017 and a sequel to my previous talk, the lost medium. A video recording is embedded below.


Good morning.

When I flew over to give this talk, I wasn’t worried about my plane falling out of the sky, not even after several high-profile plane crashes in recent months and years. But accidents do play on the minds of anyone who is scared of flying.

Mostly this worry is needless. Statistics show flying to be the safest mode of transport. But what if it wasn’t? What if every 100th plane crashed? Or every 10th? Or how about every 5th?

What if your survival on boarding an airplane were decided by the flip of a coin? Would you still board your flight? And what if a 50% survival rate were considered lucky?

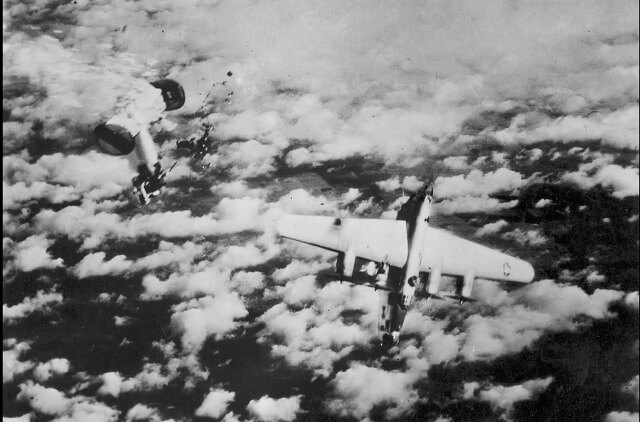

This is what you faced if you were a crew member of a World War II bomber. You flew long missions that penetrated deep into enemy territory. In anxious anticipation, all eyes searched the skies for enemy fighters armed with machine guns and rockets.

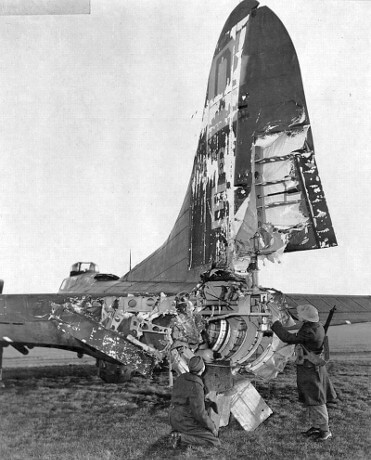

Deadly accurate flak tore through the bombers. All too often, friendly bombs dropped from above struck the planes below, or airplanes collided. And when things went wrong on your plane, the options were limited. You couldn’t leave your formation, and there was little first aid available. It was so limited that injured crew members were often thrown off the plane in the hope that the enemy would find them, patch them up and send them to a POW camp.

An airman’s tour of duty was set at 25 missions and later increased to 30. It is estimated that the average airman had only a one-in-four chance of completing his tour of duty.

The successes were purchased at a terrible cost. Of every 100 airmen, 45 were killed, 6 were seriously wounded, 8 became Prisoners of War and only 41 escaped unharmed — at least physically. Of those who were flying at the beginning of the war, only 10 percent survived. It is a loss rate comparable only to the worst slaughter of the First World War trenches.

Nevertheless, the Allied command insisted that bombing was critical to winning the war, so all this destruction led to a most pressing question: How do you cut the number of casualties?

The bomber command came up with a solution: Bombers should be more heavily armored to reduce the damage caused by flak and enemy fighter planes. But of course you can’t armor the whole airplane like a tank, or it won’t take off. So the challenge was where to put the additional armor.

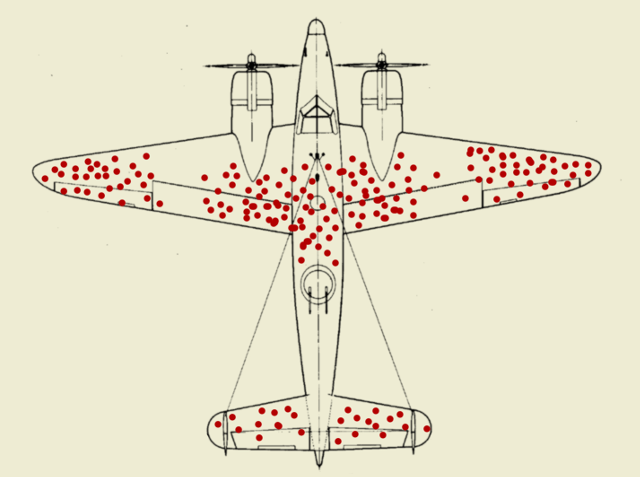

As the planes returned from their missions, the command counted up all the bullet holes on their various parts. The planes showed similar concentrations of damage in three areas: the fuselage, the outer wings and the tail.

The obvious answer was to add armor to all of these heavily damaged areas.

Obvious but wrong.

Before the planes were modified, Abraham Wald, a Hungarian-Jewish statistician, reviewed the data. Wald, who had fled Nazi-occupied Austria and worked with other academics to help the war effort, pointed out a critical flaw in the analysis: The command had only looked at airplanes that had returned.

Wald explained that if a plane made it back safely with, say, bullet holes in the fuselage, those bullet holes weren’t very dangerous. Armor was needed on the sections that, on average, had few bullet holes, such as the cockpit and the engines. Planes hit in those parts never made it back. That’s why you never saw bullet holes on the sensitive parts of the planes that did return.
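To make Wald’s reasoning concrete, here is a toy simulation of survivorship bias, written as a minimal sketch in Python. Everything in it is a hypothetical assumption rather than historical data: the five section names, hits landing uniformly at random, three hits per plane, and the rule that a single hit to the engines or cockpit downs the plane. Counting holes only on the planes that return reproduces the command’s skewed picture.

```python
import random
from collections import Counter

# Hypothetical plane sections; hits are assumed to land uniformly at random.
SECTIONS = ["fuselage", "outer wings", "tail", "engines", "cockpit"]
# Toy assumption: a single hit to any of these sections downs the plane.
FATAL = {"engines", "cockpit"}


def simulate(n_planes: int = 1000, hits_per_plane: int = 3, seed: int = 0):
    """Count bullet holes per section for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_holes = Counter()
    returned_holes = Counter()
    returned = 0
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        all_holes.update(hits)
        # A plane makes it home only if none of its hits landed on a fatal section.
        if not FATAL.intersection(hits):
            returned += 1
            returned_holes.update(hits)
    return all_holes, returned_holes, returned


all_holes, returned_holes, returned = simulate()
print(f"{returned} of 1000 planes returned")
for section in SECTIONS:
    print(f"{section:12} all planes: {all_holes[section]:4}   returned planes: {returned_holes[section]:4}")
```

Tallied over all planes, the damage is spread roughly evenly across the sections. Tallied over the returning planes only, the engines and cockpit show no holes at all, which is exactly the pattern that made the fuselage, wings and tail look like the places that needed armor.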

This finding helped to turn the tide of the war.

Now I hope a question is bubbling up in your mind. If the stakes were so high, and we’re talking about the fate of the planet or at least the fate of a nation, how could they make such a stupid mistake? And could you be making this sort of mistake, in your profession or your life? The answer is: Yes, you are.

It’s a bias that arises frequently in all kinds of contexts. And once you’re familiar with it, you’ll be primed to notice it wherever it’s hiding. It made me want to understand how we so often miss something so obvious.

And the more I thought about this, the more examples I found. What surprised me was that information scarcity was never the problem. Just like with the World War II bombers, it is never a lack of information that keeps us from solving problems. In fact, the information is almost always available; what matters is how we use it to solve a problem. I want to illustrate this argument with a peculiar story.

Johannes Gutenberg invented the printing press in the mid-1400s by converting an old wine press into a printing machine with movable type. Thirty or forty years later, there were presses in over a hundred cities in six different countries. By 1500, more than eight million books had been printed, all of them filled with information that had previously been unavailable to the average person. Books on law, politics, agriculture, metallurgy, botany, linguistics and so on.

However, the real information revolution did not start with the invention of the printing press. The books printed then had little in common with the books we see today. For example, page numbering wasn’t a tool for readers but a guide for those who wrote and produced books. It was used in early Latin manuscripts to ensure individual sheets were collated in the right order. We can find many examples of manuscripts where only one side of a page carries a number, if any side does at all.

Only by the mid-sixteenth century, almost a century after the invention of the printing press, did printers begin to experiment with new formats. One of the most important was the use of Arabic numerals to number pages. Once you number the pages in a book, you change its function dramatically. Pagination allowed indexing, annotation and cross-referencing. And it was the basis for section heads, paragraphing, chapters, running heads and all the other things we expect from a book these days. By the late sixteenth century, printed books had a typographic form resembling the one we know today.

The information age was not possible before we had page numbers. Think about this for a moment. Without them, we could not reference an argument or a section except by saying “it’s in there somewhere”. And yet numbering pages seems trivial and obvious to all of you, right? Apparently it took us close to a century to figure this out.

These two stories, about the bombers and about pagination, tell us that we never have a fully objective point of view. Even when all the information we need is available, our vision is still skewed, biased and subjective. We don’t see things as they are; we see them as we expect to see them.

The conclusion, whether we like it or not, is that there are many things we are not able to see. Maybe we will see them soon, maybe later, maybe never.

With this in mind, let’s take a look at something else: our computers. Is there something obvious we are missing about the role of computers in our lives?

It is easy to get the idea that the current state of the computer world is the climax of our great progress. Attend any tech conference and you’ll be sure to find celebrations of innovative solutions, frameworks or apps that generate, store, transform or distribute data more conveniently, easily and quickly than ever. We celebrate technology for freeing us from our work, giving us more time with our families, making us more creative, getting us from point A to point B faster and making the world a better place.

With all of the recent tech development and innovation, we should be living in an amazing world. Right? We are automating more and more of our work, becoming wealthier and more connected. And we keep trying to make everything in our environment and lives more connected, fast, smooth and compelling, even addictive.

Why then do we feel more short of time, overwhelmed and overworked? Why do people who are so connected feel isolated and lonely? Why do we suffer from FOMO, the fear of missing out? Why are we enclosed in filter bubbles of news and social media attuned to our ideological affinities, losing the ability to form our own opinions? Why are so many people worried about losing their jobs? Why is education in deep confusion about how to educate our children? Why are we spied upon by governments, employers and even schools?

Let’s pose the question differently:

When was the last time using a computer was fun?

The weird hard truth is: Our immersion in technology is quietly reducing human interactions.

From an engineer’s mindset, human interaction is often perceived as complicated, inefficient, noisy and slow. Part of making something frictionless is getting the human part out of the way. The consequences of this, however, are dire and visible to everyone. I want to show you a few examples:

- While waiting for the next train we stare at our devices and avoid talking to the person next to us, possibly missing out on a genuine connection.

- We use online ordering and delivery services to avoid sitting alone in a restaurant, because apparently that would be weird and other guests might wonder why we don’t have any friends.

- We’re working towards driverless cars, which in theory should drive more safely than humans, while at the same time eliminating the jobs of taxi, truck and delivery drivers. Meanwhile, ride-hailing apps free us from telling the driver the address or preferred route, sparing us a conversation with them.

- We use online stores to avoid awkward conversations with shop assistants when a certain piece doesn’t fit the way we want it to or when we don’t buy an item after trying it on.

- We use social media, where everybody seems to have a perfect life, to avoid the harsh conversations of real life, increasing envy and unhappiness at the same time.

- We use video games and virtual reality to lose ourselves in virtual worlds where we don’t have to talk to our friends. And yes, some games allow you to interact with friends or strangers, but the interaction itself is still virtual.

- We use robots in our factories, which means no personalities to deal with, no workers agitating for overtime and no illnesses. No liability, healthcare, taxes or social security to take care of.

- We use online dating apps so we don’t have to learn and practice the skill of dating, and don’t have to be in those uncomfortable situations where yes means no or no means yes. We even seem to lose the ability to say “hi” to a stranger on the street or in a bar: swipe right and, bang, you’re a stud.

- We use digital media providers that offer algorithmic recommendations for our movies, music and books, so you don’t even have to ask your friends what music they like. Recommendation engines know what you like and will suggest the perfect match, or at least the perfect match for spending the weekend at home alone.

Now, I am sure the creators of these solutions only had our best interest in mind, and I am not trying to pick on them. But there is a built-in assumption that whatever a computer can do, it should do, and that it is not for us to ask about its purpose or limitations. So everyone uses computers and, more importantly, everyone is used by computers, for purposes that seem to know no boundaries.

Instead of augmenting humans as imagined by the fathers of early personal computing, our computers have turned out to be mind-numbing consumption devices. What happened to the bicycle for the mind that Steve Jobs envisioned?

The sad and real implication is that our technology is driving us apart. But we humans don’t exist as segregated individuals. We’re social animals; we’re part of networks. Our random accidents and behaviours make life enjoyable. Nevertheless, we seem to be farther apart than ever.

Tech pundits and companies are trying to make us believe that “software is eating the world”, that they’re “connecting people” and helping us to “think different”, that we live in an “always-on world” or that the “singularity is near”. I would argue that this is nonsense.

Computer technology has not yet come close to the printing press in its power to generate radical and substantive thoughts on a social, economic, political or even philosophical level. The printing press was the dominant force that transformed the Middle Ages into a scientific society, not just by making books more available, but by changing the thought patterns of those who learned to read. The changes brought to our society by computer technology in the past 50 years pale in comparison. We are making computers available in all forms, but we’re far from generating new thoughts or breaking up old thought patterns.

Joseph Weizenbaum, one of the fathers and leading critics of modern artificial intelligence, wrote:

The arrival of the Computer Revolution and the founding of the Computer Age have been announced many times. But if the triumph of a revolution is to be measured in terms of the profundity of the social revisions it entrained, then there has been no computer revolution.

Alan Kay, another important figure in the development of early personal computing, wrote this:

It looks as though the actual revolution will take longer than our optimism suggested, largely because the commercial & educational interests and modes of thought have frozen personal computing pretty much at the “imitation of paper, recordings, film and TV” level.

And finally, Konrad Zuse, computer pioneer and inventor of the world’s first programmable computer, said this:

The danger of computers becoming like humans is not as great as the danger of humans becoming like computers.

We happily submit ourselves to machines and think that’s all there is. Just look around you — almost everyone is glued to their smartphones.

But our machines and algorithms are not becoming more intelligent. If we think they are, we have it backwards! For our technology to work perfectly, society has to dumb itself down in order to level the playing field between humans and computers. What is most significant about this line of thinking is the dangerous reductionism it represents.

To close the gap between the ease of capturing data and the difficulties of the human experience, we make ourselves perfectly computer-readable. We become digits in a database, dots in a network, symbols in code and therefore less human.

The computer claims sovereignty over the whole range of human experience and supports its claim by showing that it thinks better than we can. The fundamental metaphorical message of the computer is that we become machines. Our nature, our biology, our emotions and our spirituality become subjects of second order.

For all this to work we assume that humans are in some ways like machines. From this we move to the proposition that humans are little else but machines. And finally we argue that human beings are machines. Thus it follows that machines become human beings. And from here the jump to a superintelligent artificial intelligence (AI) is trivial.

But the truth is that machines can’t be humans, and vice versa. Computers work with concrete symbols, but feelings, experiences and sensations sometimes cannot be put into symbols. Nevertheless, they mean something to us. The computer and AI do not and cannot lead to a meaning-making, judging and feeling creature, which is what a human being is. Machines cannot feel and, just as important, cannot understand. No doubt machines are able to simulate parts of the human experience, or might be better than us at playing chess or Go, flying a plane, driving a car or finding patterns in a muddle of data. Nevertheless, they lack a fundamental understanding of what they’re doing. Regardless of the enormous progress in machine learning and AI in recent years, and of whatever success they achieve in the long term, we have to take this fallacy into account.

Leaving aside the viability and philosophical questions of AI, the issue mostly comes down to a practical one. Whether or not AI succeeds in the long term, it will nevertheless be developed and deployed with uncompromising effort. But if we subdue and submit ourselves to semi-intelligent programs, failing to plan for the robot apocalypse will be the least of our worries. We’re already starting to live our lives inside machines; our smartphones and social media are prime examples of this. When machines become a significant part of our everyday lives, the tragedy will be that they remain as ordinary and dull as they are today and overtake us anyway.

So by trying to improve computers we’re dumbing down our society. We have to address this misconception.

Instead of using technology to replace people, we should use it to augment ourselves to do things that were previously impossible, to help us make our lives better. That is the sweet spot of our technology. We have to accept human behaviour the way it is, not the way we would wish it to be.

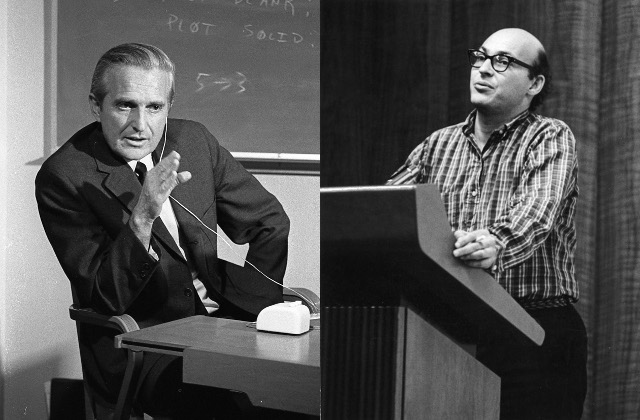

Nothing contrasts this argument better than a conversation in the 1950s between Marvin Minsky, one of the fathers of artificial intelligence, and Douglas Engelbart, one of the fathers of personal computing, who believed we have to assist humans rather than replace them. Minsky declared:

We’re going to make machines intelligent. We are going to make them conscious!

To which Engelbart reportedly replied:

You’re going to do all that for the machines? What are you going to do for the people?

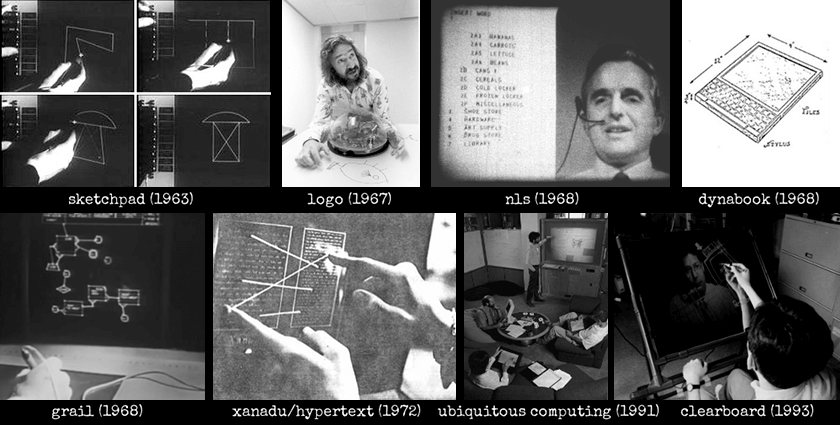

We somehow lost the idea of what we’re going to do for the people, an argument I made last year in my talk, the lost medium. It’s not that these ideas aren’t out there. Have a look at this short clip showcasing Sketchpad, a drawing program that helped define how we interact with computers graphically today.

Remember, this is from 1964, and we hear things like:

The old way solving problems with the computer has been to understand the problem very very well and to write out in detail all the steps that it takes to solve a problem.

or

If you for example in the old days made so much as one mistake of a comma in the wrong place the entire program would hang up.

This was from 1964. Doesn’t that sound alarmingly like programming today?

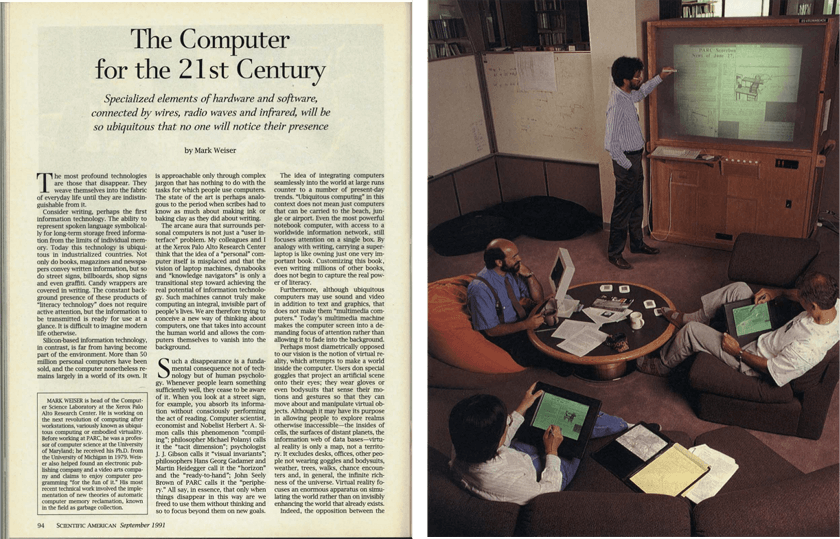

Or take Mark Weiser, who thought a lot about how computers could fit into the human environment instead of forcing humans to enter theirs. Thirty years ago, he and his team at Xerox PARC were thinking about how to integrate computers into our environment and enhance our abilities there, so that we would not become slaves to our own devices. He believed that this would lead to an era of calm technology which, rather than panicking us, would help us focus on what really matters to us.

It’s not that these ideas were never explored or were found to be impractical. We have tons of research projects, prototypes and devices lying around; last year I made a point of showing some of them in detail. The issue is that they’re slowly but surely being forgotten.

In the words of Douglas Engelbart:

These days, the problem isn’t how to innovate; it’s how to get society to adopt the good ideas that already exist.

We tend to adopt a “worse is better” or “done is better than perfect” attitude. This is not the best way to do most things, because it drives us away from figuring out what is actually needed. Most importantly, these hacks tend to define our new normal, so it becomes really hard to think about the actual issue. Of course, this is easier, which is why we’re tempted to choose an increment and say “at least it’s a little bit better”. But if the increment doesn’t reach the threshold of what is actually needed, it isn’t a little better at all; it’s an illusion.

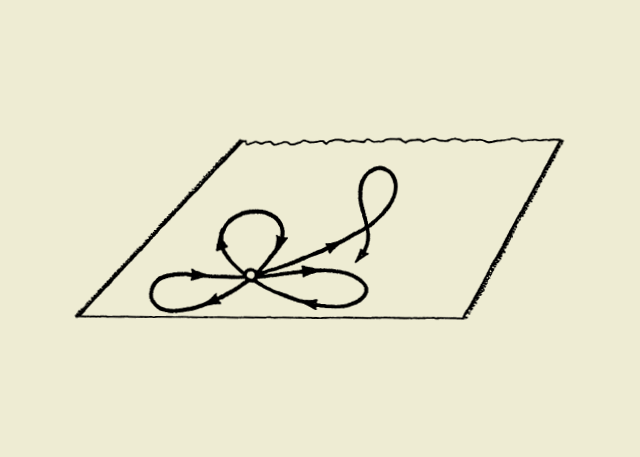

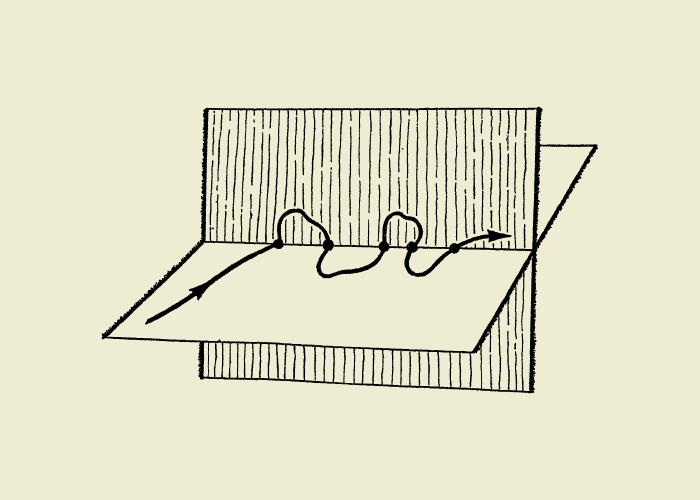

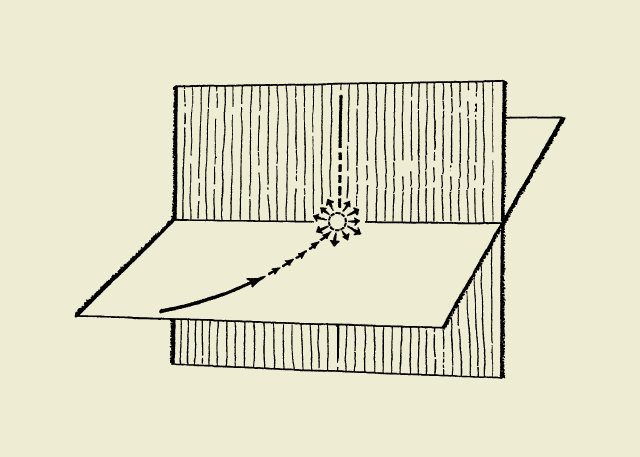

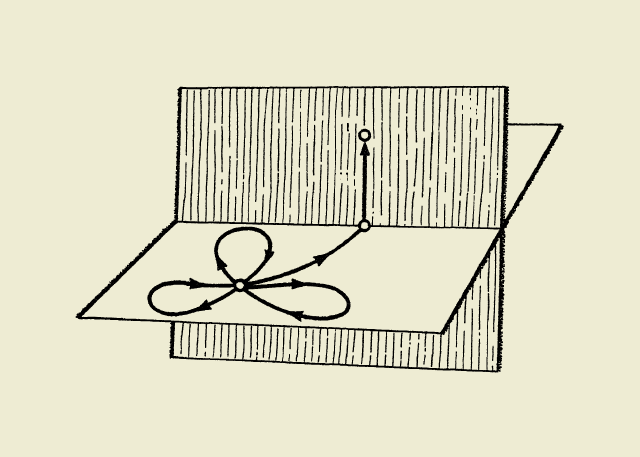

This line of thinking comes from Arthur Koestler, a great novelist who researched creativity and innovation. He said our minds are constrained to think within a given context or system of belief. Picture this context as a plane on which we can walk around freely. We can make plans, encounter problems and obstacles, solve them or walk around them. And that is basically all we’re able to do. We accept this plane as reality, and our thought patterns stay in it because we grew up in it and that’s all there is.

But every once in a while, when you’re taking a shower or out jogging, you have a little outlaw thought that doesn’t really fit in this plane. We suppress it and push it back, dismissing it as a joke or as impractical.

But sometimes you let these little outlaw thoughts flourish and you find out this little idea is much more interesting when expanded into a different plane — a different context.

When you dip your toes into that new context, three things happen which explain why we’re having trouble making progress:

First, the idea makes perfect sense in the new context, but it doesn’t make sense in the existing one. In the existing plane it’s utter nonsense and so you turn yourself into a crackpot for a little while. That’s one force pulling you back, if you don’t want to be seen as crazy.

Second, when you’re trying to explain this idea to someone else they really have to go through a similar process. This is probably the most difficult thing about real innovation as other people have to learn to see this new context, even though it might be incomplete or absolutely nutty.

And third, this new context is just another plane where we make plans, explore and optimize. And everything starts over again.

This is the process of how we humans innovate.

And here is the problem: If you’re never exposed to new ideas and contexts, if you grow up only being shown one way of thinking about the computer and being told that there are no other ways to think about it, you grow up thinking you know what you’re doing. You believe we have already fleshed out all the details, improved and optimized everything a computer has to offer. We celebrate alleged innovation and then delegate picking up the broken pieces to society, because it’s not our fault; we figured it out already.

Engineering is probably one of the hardest fields to innovate and to be creative in, if you don’t have any other kinds of knowledge to think with. This is because engineering is all about optimizing and you can’t do that without being firmly anchored to the context you’re in. So we grow up with a dogma and it’s really hard to break out of it.

What’s really fascinating here is that one of the reasons the early days of computing were so interesting was that nobody back then was a computer scientist.

Everybody who came into computing back then came with a different background, interest and knowledge of other domains. They didn’t know anything about the computer and therefore tried everything they could think of while figuring out how they could leverage it to solve their problems.

So what are we talking about here? Well, it is not about optimization, not about disruption, not about just the next thing and not about adding armor to the wrong places on bombers. It is about rotating the point of view.

When I meet people who work at the world’s leading tech companies, I ask them why they don’t consider the long-term consequences of their work on society. I ask why they don’t allow radical new ideas that augment human capabilities, or why their so-called innovation and disruption is merely recycled old stuff in new clothing. They answer that these problems are hard to solve. But let’s be honest: the Googles, Facebooks and Twitters and virtually every tech company in the world solve similarly difficult technical problems every single day.

I want to suggest that this is not entirely a technological problem. Rather, it’s a problem of profits and politics.

Which raises the question of what we, and what you, can do about it. Steve Jobs put it this way:

When you grow up you tend to get told the world is the way it is and your life is just to live your life inside the world. Try not to bash into the walls too much. Try to have a nice family life, have fun, save a little money.

That’s a very limited life. Life can be much broader once you discover one simple fact: Everything around you that you call life, was made up by people that were no smarter than you. And you can change it, you can influence it, you can build your own things that other people can use.

The most important thing is to shake off this erroneous notion that life is there and you’re just gonna live in it, versus embrace it, change it, improve it, make your mark upon it. And however you learn that, once you learn it, you’ll want to change life and make it better, cause it’s kind of messed up, in a lot of ways. Once you learn that, you’ll never be the same again.

I began this talk with a story about World War II bombers and want to end with it. It stunned me that something as obvious as where to put the additional armor simply could not be seen by people at that time.

And it makes me really curious about the little outlaw thoughts everyone in this room has right now. The little thoughts we glimpse now but that will be completely obvious to people in fifty years.

Do you know the most dangerous thought of all?

It is to think you know what you’re doing. Because once you think that, you stop looking around for other, better ways of doing things, you never pause to give little outlaw thoughts a chance, and you stop being open to alternative ways of thinking. You become blind.

We have to tell ourselves that we haven’t the faintest idea of what we’re doing. We, as a field, haven’t the faintest idea of what we’re doing. And we have to tell ourselves that everything around us was made up by people that were no smarter than us, so we can change, influence and build things that make a small dent in the universe.

Only once we understand that might we be able to do what the early fathers of computing dreamed about: To make humans better, with the help of computers.

Thank you.

Thanks to Nadine Bruder, Ryan L. Sink and Marian Edmunds for listening to me complain at length about all this stuff before someone gave me a podium.


recommended reading

Below is a list of the works cited in and used to build this talk, the result of many hours of reading and research. Each one is fantastic and well worth your time.

Roy Ascott. Is there Love in the Telematic Embrace?

James Burke. Admiral Shovel and the Toilet Roll.

David Byrne. Eliminating the Human.

Mike Caulfield. Information Underload.

Maciej Cegłowski. Build a Better Monster: Morality, Machine Learning, and Mass Surveillance, Superintelligence: The Idea That Eats Smart People, Deep-Fried Data.

Frank Chimero. The Web’s Grain.

Florian Cramer. Crapularity Hermeneutics.

Sebastian Deterding. Memento Product Mori: Of ethics in digital product design.

Nick Disabato. Cadence & Slang.

Douglas C. Engelbart. A Research Center for Augmenting Human Intellect (The Mother of all Demos), Augmenting Human Intellect: A Conceptual Framework, Improving Our Ability to Improve: A Call for Investment in a New Future.

Robert Epstein. How the internet flips elections and alters our thoughts.

Alex Feyerke. Step Off This Hurtling Machine.

Nick Foster. The Future Mundane.

Stephen Fry. The future of humanity and technology.

Robin Hanson. This AI Boom Will Also Bust.

Tristan Harris. How Technology Hijacks People’s Minds.

Werner Herzog. Lo and Behold, Reveries of the Connected World.

Steve Jobs. When We Invented the Personal Computer.

Alan C. Kay. Doing with Images Makes Symbols, How to Invent the Future, Is it really “Complex”? Or did we just make it “Complicated”?, Normal Considered Harmful, The Center of “Why?”, The Real Computer Revolution Hasn’t Happened Yet, The Computer Revolution Hasn’t Happened Yet, The Power Of The Context, User Interface: A Personal View, Vannevar Bush Symposium 1995.

Alan Kay & Adele Goldberg. Personal Dynamic Media.

Jeremy Keith. The Long Web, As We May Link.

Kevin Kelly. The Myth of a Superhuman AI.

Bruno Latour. Visualisation and Cognition: Drawing Things Together.

Robert C. Martin. Twenty-Five Zeros.

Ted Nelson. Computers for Cynics, Pernicious Computer Traditions.

Michael Nielsen. Thought as a Technology.

C.Z. Nnaemeka. The Unexotic Underclass.

Seymour Papert. Teaching Children Thinking.

Neil Postman. Amusing Ourselves to Death, Five Things We Need to Know About Technological Change, Technopoly, The Surrender of Culture to Technology.

Clay Shirky. The Shock of Inclusion.

daniel g. siegel. the lost medium.

Simon Sinek. What’s wrong with Millennials.

Ivan E. Sutherland. Sketchpad: A Man-Machine Graphical Communication System.

Bret Victor. A Brief Rant on the Future of Interaction Design, Media for Thinking the Unthinkable, The Future of Programming, The Humane Representation of Thought.

Audrey Watters. Driverless Ed-Tech: The History of the Future of Automation in Education, Teaching Machines and Turing Machines: The History of the Future of Labor and Learning, The Best Way to Predict the Future is to Issue a Press Release.

Mark Weiser. The Computer for the 21st Century.